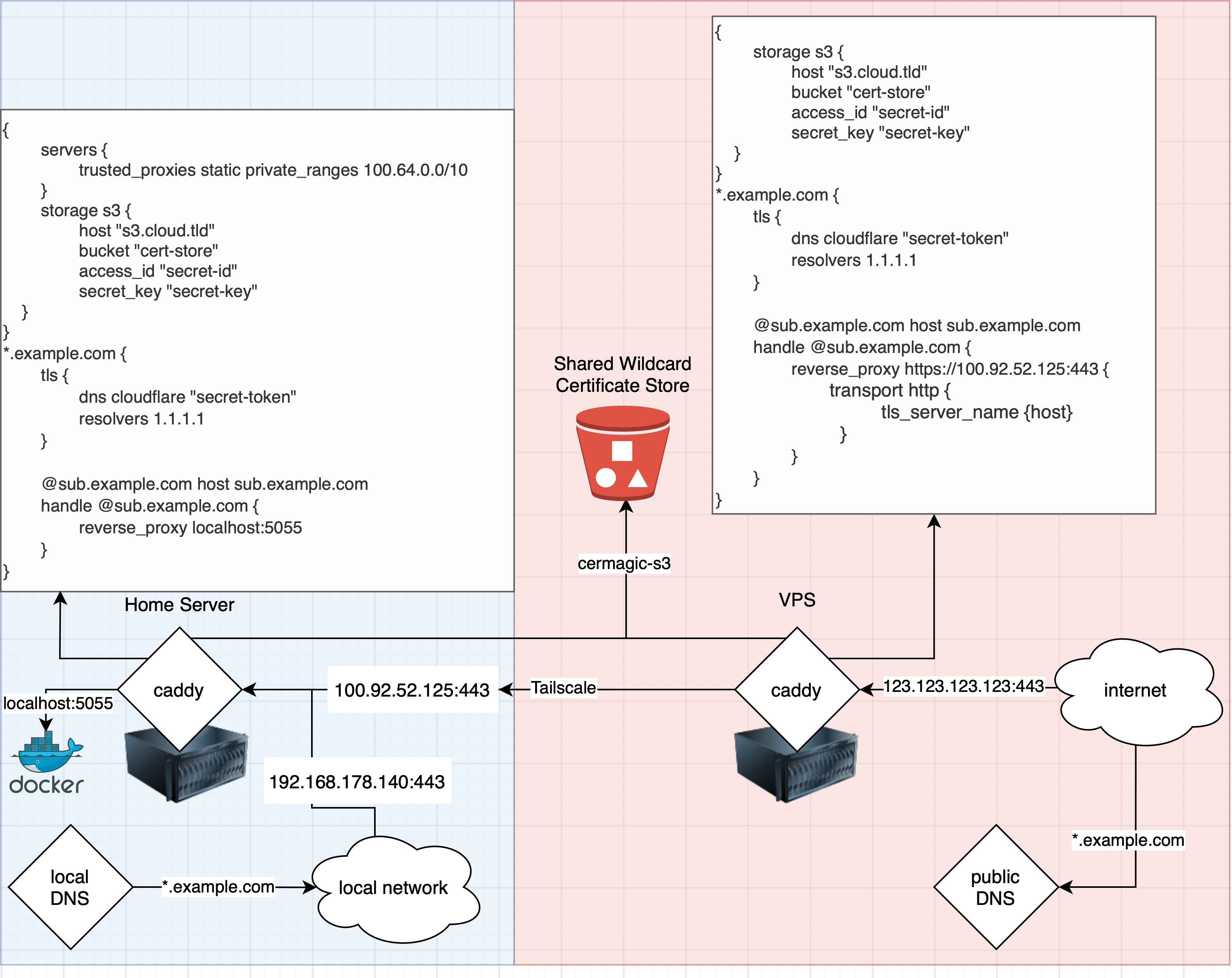

I run a beefy local server that hosts multiple Docker containers, a media server, and more on my home network. To make the media server reachable from the internet, I rent a really cheap VPS that lets family and friends outside my home network access it from wherever they are.

On that external VPS I am running a Caddy reverse proxy and a Tailscale agent. On my local server I am also running a Tailscale agent and another Caddy reverse proxy, which points to my local Docker containers.

My Tailscale network is configured to allow access from the VPS IP to the internal server IP, and only on port 443:

```json
{
  "acls": [
    {
      "action": "accept",
      "src": ["100.95.79.40"], // VPS IP
      "dst": ["100.92.52.125:443"] // server IP
    }
  ]
}
```
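To make the effect of this rule concrete, here is a minimal Python sketch of how a default-deny policy with a single accept rule behaves. It is a simplified model for illustration, not Tailscale's actual policy engine: it only matches literal IPs and ports and ignores groups, tags, and wildcards. The function name `is_allowed` is made up for this example.

```python
# Simplified model of the ACL above: default deny, literal IP:port matches only.
ACLS = [
    {
        "action": "accept",
        "src": ["100.95.79.40"],       # VPS IP
        "dst": ["100.92.52.125:443"],  # server IP, HTTPS only
    }
]


def is_allowed(acls, src_ip, dst_ip, dst_port):
    """Return True if any accept rule matches src_ip -> dst_ip:dst_port."""
    target = f"{dst_ip}:{dst_port}"
    for rule in acls:
        if rule["action"] != "accept":
            continue
        if src_ip in rule["src"] and target in rule["dst"]:
            return True
    return False  # nothing matched, so the connection is denied


print(is_allowed(ACLS, "100.95.79.40", "100.92.52.125", 443))  # VPS -> server, HTTPS
print(is_allowed(ACLS, "100.95.79.40", "100.92.52.125", 22))   # VPS -> server, SSH
print(is_allowed(ACLS, "100.92.52.125", "100.95.79.40", 443))  # server -> VPS
```

Only the first check passes. The VPS cannot reach any other port on the server, and the server cannot open connections to the VPS at all.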

My AdGuard Home DNS server (a Pi-hole alternative) contains a few DNS rewrites so that my public domain and its subdomains resolve to an internal address (192.168.178.140) inside my home network.
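As a rough illustration of what such rewrites do, here is a small Python sketch that maps a queried name to the internal address before any upstream lookup would happen. The rewrite table and the function name `rewrite` are assumptions for this example (`example.com` is the placeholder domain used in the Caddyfiles further down), and the matching is a simplified model, not AdGuard Home's actual logic.

```python
# Hypothetical rewrite table: pattern -> internal answer.
REWRITES = {
    "example.com": "192.168.178.140",
    "*.example.com": "192.168.178.140",
}


def rewrite(name, rewrites=REWRITES):
    """Return the rewritten IP for name, or None to fall through to upstream DNS."""
    name = name.rstrip(".").lower()
    if name in rewrites:
        return rewrites[name]
    # Walk up the labels: a.b.example.com -> *.b.example.com -> *.example.com
    labels = name.split(".")
    for i in range(1, len(labels)):
        wildcard = "*." + ".".join(labels[i:])
        if wildcard in rewrites:
            return rewrites[wildcard]
    return None


print(rewrite("sub.example.com"))  # answered locally with the internal IP
print(rewrite("example.org"))      # None: not rewritten, goes to upstream DNS
```

Devices at home therefore talk to the local Caddy directly, while everyone outside resolves the same names through public DNS and ends up at the VPS.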

Additionally, I block all internal devices except the DNS server itself from sending outbound DNS traffic to the internet. With DoT (DNS over TLS) and DoH (DNS over HTTPS), though, it has become quite hard to prevent devices from sending DNS queries to the internet.

Both Caddy instances are built with two additional modules. One enables the DNS challenge via Cloudflare for my wildcard certificates; the other adds a storage backend that keeps those wildcard certificates in an S3 bucket. The bucket is reachable from the internet, but access requires credentials.

This is my Dockerfile for building a custom Caddy server:

```dockerfile
FROM caddy:builder AS builder

RUN xcaddy build \
    --with github.com/caddy-dns/cloudflare \
    --with github.com/ss098/certmagic-s3

FROM caddy:latest

COPY --from=builder /usr/bin/caddy /usr/bin/caddy
```

Here is my docker-compose.yaml file, which sits directly next to the Dockerfile and configures the Caddy container:

```yaml
services:
  caddy:
    container_name: caddy
    build: .
    restart: unless-stopped
    network_mode: "host"
    cap_add:
      - NET_ADMIN
    volumes:
      - ./Caddyfile:/etc/caddy/Caddyfile:ro
      # autosave config
      - ./volumes/config:/config/caddy
      # certificates
      - ./volumes/data:/data/caddy
      # logs
      - ./volumes/logs:/var/log/caddy
      # webroot
      - ./volumes/www:/var/www
```

My Makefile for building the Docker image looks like this (`restart` and `update` are aliases for `rebuild`, which runs `stop` first):

```makefile
.PHONY: start stop update rebuild restart logs

restart: update

update: rebuild

rebuild: stop
	docker compose pull
	docker compose up --build --force-recreate -d
	docker image prune -f
	docker compose logs -f caddy

start:
	docker compose up -d

stop:
	docker compose down

logs:
	docker compose logs -f caddy
```

And last but not least, both Caddyfiles, one for the VPS and one for the local server.

Internal Caddyfile (local server):

```caddyfile
{
    servers {
        # Trust private ips and additionally Tailscale ips
        trusted_proxies static private_ranges 100.64.0.0/10
    }

    # Enable the S3 storage module for storing certificates
    storage s3 {
        host "s3.cloud.tld"
        bucket "cert-store"
        access_id "secret-id"
        secret_key "secret-key"
    }
}

*.example.com {
    tls {
        # Use the Cloudflare DNS challenge for wildcard certificates
        dns cloudflare "secret-token"
        resolvers 1.1.1.1
    }

    @sub.example.com host sub.example.com
    handle @sub.example.com {
        # Reverse proxy to the local Docker container
        # (bound to 127.0.0.1:5055:5055)
        # which prevents external access to the container
        reverse_proxy localhost:5055
    }
}
```
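The `trusted_proxies` line is the part that is easy to overlook: requests forwarded by the VPS arrive from its Tailscale address, which is not in any of the usual private ranges. Here is a small Python sketch of the membership check this implies. The list of ranges behind `private_ranges` reflects my understanding of the Caddy documentation, and `is_trusted_proxy` is a made-up name for this example, not a Caddy API.

```python
import ipaddress

# Ranges I understand Caddy's `private_ranges` shortcut to cover (assumption),
# plus the Tailscale CGNAT range added explicitly in the Caddyfile.
TRUSTED = [
    ipaddress.ip_network(net)
    for net in (
        "192.168.0.0/16",
        "172.16.0.0/12",
        "10.0.0.0/8",
        "127.0.0.0/8",
        "fd00::/8",
        "::1/128",
        "100.64.0.0/10",  # Tailscale addresses
    )
]


def is_trusted_proxy(addr):
    """Return True if addr falls into one of the trusted proxy ranges."""
    ip = ipaddress.ip_address(addr)
    return any(ip.version == net.version and ip in net for net in TRUSTED)


print(is_trusted_proxy("100.95.79.40"))  # the VPS, via Tailscale
print(is_trusted_proxy("8.8.8.8"))       # some public address
```

Without `100.64.0.0/10` in the list, the VPS would count as an untrusted client, and the internal Caddy would ignore the forwarded client IP it sends along.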

External Caddyfile (VPS):

```caddyfile
{
    storage s3 {
        host "s3.cloud.tld"
        bucket "cert-store"
        access_id "secret-id"
        secret_key "secret-key"
    }
}

*.example.com {
    tls {
        dns cloudflare "secret-token"
        resolvers 1.1.1.1
    }

    @sub.example.com host sub.example.com
    handle @sub.example.com {
        reverse_proxy https://100.92.52.125:443 {
            transport http {
                tls_server_name {host}
            }
        }
    }
}
```
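The `tls_server_name {host}` line is needed because the VPS dials the internal server by its Tailscale IP, while the certificate on the other side is issued for `*.example.com`. The same idea can be sketched in Python: connect to the IP, but send the hostname via SNI and verify the certificate against it. The helper name `fetch_peer_cert` is hypothetical; the sketch only uses the standard `socket` and `ssl` modules.

```python
import socket
import ssl


def fetch_peer_cert(ip, hostname, port=443, timeout=5.0):
    """Connect to ip:port, present `hostname` via SNI, and verify against it."""
    # The default context verifies the certificate chain and the hostname.
    ctx = ssl.create_default_context()
    with socket.create_connection((ip, port), timeout=timeout) as sock:
        # server_hostname sets the SNI value and the name used for verification.
        with ctx.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()
```

Calling `fetch_peer_cert("100.92.52.125", "sub.example.com")` from the VPS should return the wildcard certificate if the Tailscale ACL and the internal Caddy are set up as described. If the IP were also used as the server name, the verification would fail, because the certificate does not cover the IP.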

And this is what the whole picture finally looks like: